Deep Learning for Solving Math Problems

07.02.2023

This post presents a university project developed for the Deep Learning course exam, in which we explored how deep learning models can answer mathematical questions. We started by analyzing the Mathematics Dataset introduced by Saxton et al. (2019), which helped us understand the challenges of the task and guided our experimental setup.
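To make the data format concrete, here is a minimal sketch of reading question/answer pairs. It assumes that each plain-text file in the public release stores a question on one line and its answer on the next line. The helper `read_qa_pairs` and the two sample questions are hypothetical illustrations, not taken from our notebook or from the dataset itself.

```python
import io
from typing import Iterable, List, Tuple


def read_qa_pairs(lines: Iterable[str]) -> List[Tuple[str, str]]:
    """Pair consecutive lines as (question, answer).

    Assumes the layout alternates question line, answer line.
    """
    stripped = [line.rstrip("\n") for line in lines]
    if len(stripped) % 2 != 0:
        raise ValueError("expected an even number of lines (question/answer pairs)")
    # Even-indexed lines are questions, odd-indexed lines are answers.
    return list(zip(stripped[0::2], stripped[1::2]))


# Hypothetical sample in the assumed two-line format.
sample = io.StringIO(
    "Round 3.78 to the nearest integer.\n"
    "4\n"
    "Differentiate 2*x**3 with respect to x.\n"
    "6*x**2\n"
)
pairs = read_qa_pairs(sample)
print(pairs)
```

In practice the same function can be called on an open file object, since iterating over a text file also yields lines ending in `\n`.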

To establish meaningful comparisons, we implemented three different models:

- an LSTM network

- a standard Transformer

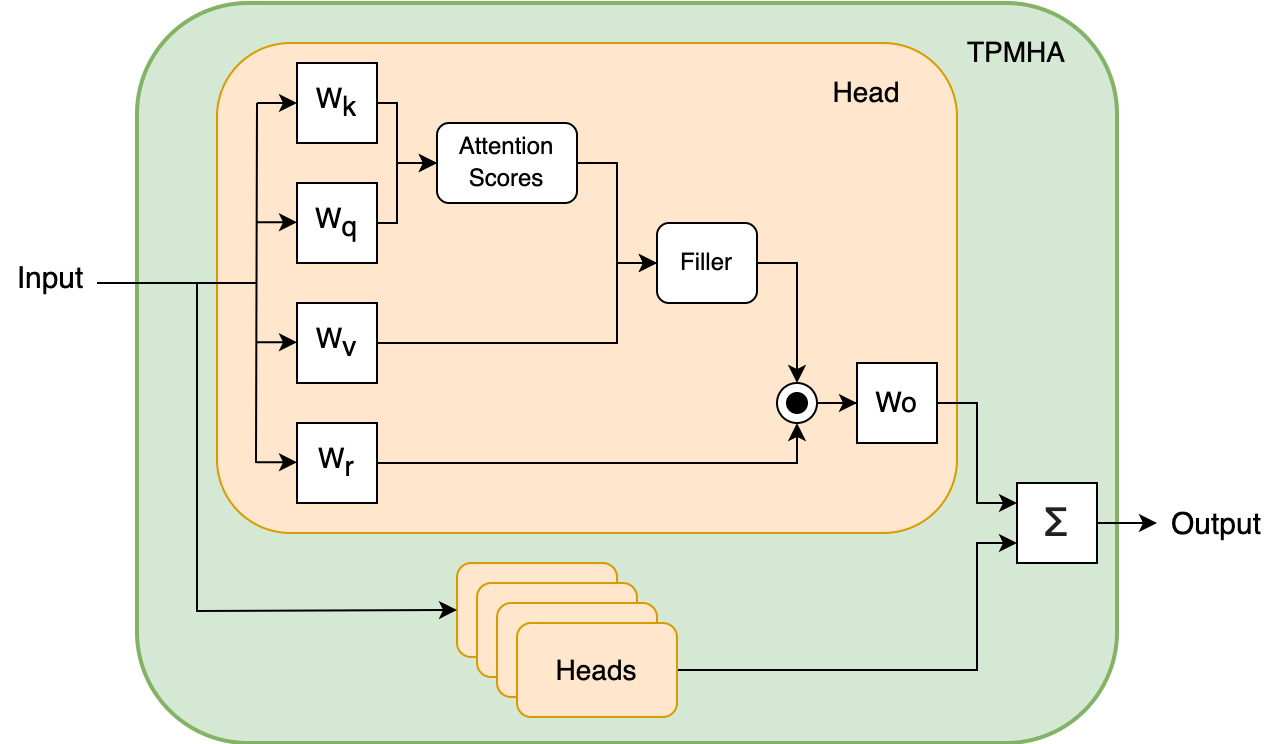

- and the Tensor-Product Transformer (TP-Transformer), the current state-of-the-art for this task.
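The main difference between the TP-Transformer and the standard Transformer lies in its attention heads. The sketch below is a simplified, single-head NumPy version based on our reading of the TP-Transformer paper. It computes ordinary scaled dot-product attention (the "filler"), then binds it to a per-position "role" vector through an element-wise (Hadamard) product, which is a compressed form of a tensor product. The function and weight names are hypothetical. Multi-head concatenation, the output projection, and any further details of the original architecture are deliberately omitted.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    z = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def tp_attention_head(x, w_q, w_k, w_v, w_r):
    """Simplified single TP-attention head (sketch).

    x: (seq_len, d_model); each w_*: (d_model, d_head).
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    r = x @ w_r                               # role vector for each position
    scores = q @ k.T / np.sqrt(q.shape[-1])   # scaled dot-product scores
    filler = softmax(scores, axis=-1) @ v     # standard attention output
    return filler * r                         # bind filler to role (Hadamard product)


rng = np.random.default_rng(0)
seq_len, d_model, d_head = 5, 16, 8
x = rng.normal(size=(seq_len, d_model))
w_q, w_k, w_v, w_r = (rng.normal(size=(d_model, d_head)) for _ in range(4))
out = tp_attention_head(x, w_q, w_k, w_v, w_r)
print(out.shape)  # (5, 8)
```

Dropping the final multiplication by `r` recovers a standard attention head, which is why the two Transformer variants are directly comparable.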

The goal was to evaluate how sequence-to-sequence architectures built on different design principles (recurrence, self-attention, and self-attention with explicit role binding) perform on mathematical reasoning tasks.

Due to hardware limitations (we used the free tier of Google Colab), we could neither use the full dataset nor train for long. Instead, we selected three modules ("numbers round", "calculus differentiate", and "polynomials evaluate"), totaling around 6 million samples. Training on a mix of modules forced the models to learn several types of problems instead of specializing narrowly in one.
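A possible way to set up this module subset is sketched below. It assumes the directory layout and file names of the public v1.0 release (`train-easy`, `train-medium`, `train-hard` folders containing one `.txt` file per module), so adjust them to your copy. The helpers `training_files` and `mix_modules` are hypothetical names used only for illustration. Shuffling the combined pairs means each batch can contain a mix of problem types rather than one module at a time.

```python
import random
from pathlib import Path
from typing import Dict, List, Tuple

# Assumed module file names from the public release.
MODULES = ["numbers__round_number", "calculus__differentiate", "polynomials__evaluate"]
# Assumed training splits, grouped by difficulty in the release.
TRAIN_SPLITS = ["train-easy", "train-medium", "train-hard"]


def training_files(root: str) -> List[Path]:
    """List one file path per (split, module) combination under `root`."""
    base = Path(root)
    return [base / split / f"{module}.txt" for split in TRAIN_SPLITS for module in MODULES]


def mix_modules(per_module: Dict[str, List[Tuple[str, str]]], seed: int = 0) -> List[Tuple[str, str]]:
    """Concatenate the (question, answer) pairs of all modules and shuffle them."""
    combined = [pair for pairs in per_module.values() for pair in pairs]
    random.Random(seed).shuffle(combined)  # seeded for reproducibility
    return combined


files = training_files("mathematics_dataset-v1.0")
toy = {
    "numbers__round_number": [("Round 3.78 to the nearest integer.", "4")],
    "calculus__differentiate": [("Differentiate 2*x**3 with respect to x.", "6*x**2")],
}
mixed = mix_modules(toy)
print(len(files), len(mixed))  # 9 2
```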

Despite the limited resources, our experiments confirmed some key findings:

- Transformer-based models reached good accuracy in fewer epochs than the LSTM, even though each epoch required more computation.

- The TP-Transformer outperformed both the LSTM and the standard Transformer, particularly on the extrapolation test sets, which require generalizing beyond the training distribution (for example, to larger numbers than those seen during training).
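As a reference for how such comparisons can be scored, here is a sketch of exact-match accuracy. This is the criterion used with the original Mathematics Dataset, where a prediction counts as correct only if the whole answer string matches. Treating it as the metric behind the results above is an assumption, and the function name `exact_match_accuracy` and the sample predictions are hypothetical.

```python
from typing import Sequence


def exact_match_accuracy(predictions: Sequence[str], targets: Sequence[str]) -> float:
    """Fraction of predictions whose full answer string equals the target.

    Surrounding whitespace is ignored; no partial credit is given.
    """
    if len(predictions) != len(targets):
        raise ValueError("predictions and targets must have the same length")
    if not targets:
        raise ValueError("cannot compute accuracy on an empty set")
    correct = sum(p.strip() == t.strip() for p, t in zip(predictions, targets))
    return correct / len(targets)


preds = ["4", "6*x**2", "-7"]
gold = ["4", "6*x**2", "-8"]
print(exact_match_accuracy(preds, gold))  # 0.6666666666666666
```

Because a single wrong character makes the whole answer wrong, this metric is strict, which is one reason extrapolation sets are hard for all three models.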

Although constrained by training time and compute, this project showed the potential of modern Transformer architectures for structured reasoning tasks such as mathematics. We expect that, with more resources, the models (especially the TP-Transformer) would achieve even stronger results.

Check out the GitHub repo for the full PDF report and the Colab notebook with the complete implementation.