The Illustrated Transformer

05.04.2024

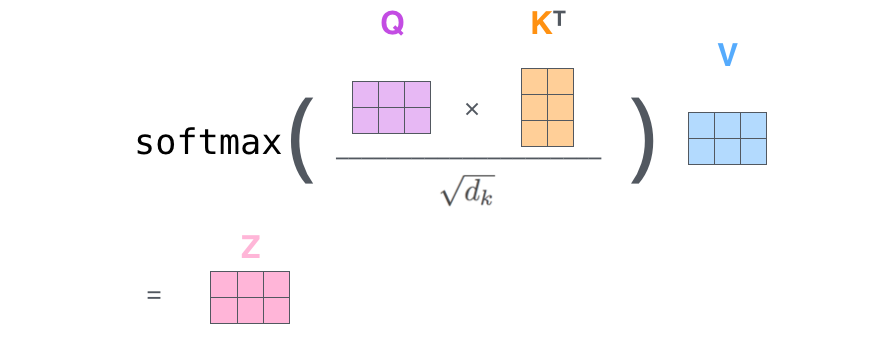

Transformers underpin nearly all major AI text-generation models available today. Although they look complex, the basic architecture of a transformer combines many simple building blocks into a more intricate whole. Moreover, the underlying mathematics relies on simple operations (dot products, sums, and normalizations) that are easy to understand when illustrated.
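To give a feel for how simple those operations are, here is a minimal sketch of scaled dot-product attention, the core operation of the Transformer architecture. It uses plain Python lists rather than a tensor library. The function names (`softmax`, `attention`) and the toy inputs are my own choices for illustration, not something taken from a particular implementation.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key: a dot product scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # The output is a weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs

# Toy input (made up for illustration): 2 tokens, each a 2-dimensional vector.
tokens = [[1.0, 0.0], [0.0, 1.0]]
result = attention(tokens, tokens, tokens)
print(result)
```

Each token ends up as a blend of all tokens, weighted towards the ones it is most similar to. Real models add learned projection matrices, multiple heads, and further layers around this step, but the arithmetic stays at this level: multiply, add, normalize.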

If you want to understand exactly how they work, I recommend this blog post, which helped me fully grasp the workings of Transformers!